The new architecture of Hackystat has me thinking about new metaphors for software engineering measurement. Indeed, it has me wondering if where we are heading is even characterized best as "measurement" or even "software engineering". Alistair Cockburn, for example, has written an article on The End Of Software Engineering in which he challenges the use of the term "software engineering" as an appropriate description for what people do (or should do) when developing software.

Similarly, when we began work on Hackystat six years ago, I thought in fairly conventional terms about this system: it was basically a way to make it simpler to gather more accurate measures that could be used for traditional software engineering measurement activities: baselines, prediction, control, quality assessment.

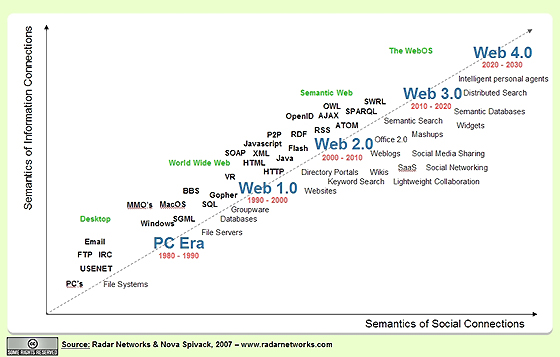

One interesting and unintended side effect of the Hackystat 8 architecture, in which the system becomes a seamless component of the overall internet information ecosystem via a set of RESTful web services, is a re-examination of my fundamental conceptions of what the system could and should be. In particular, two Web 2.0 concepts, "mashup" and "social network", provide interesting metaphors.

Measurement as Mashup

Hackystat has always embraced the idea of "mashup". From the earliest days, we have pursued the hypothesis that there is a "network effect" in software process and product metrics; that the more orthogonal measures you could gather about a system, the more potential you would gain for insight as you obtained the ability to compare and contrast them. Thus, we created a system that was easily extensible with sensors for different tools that could gather data of different types.

Software Project Telemetry is an early result of our search for ways to obtain meaning within this network effect. In Software Project Telemetry, we created a language that enables users to easily create "measurement mashups" consisting of metrics and their trends over time. The following screen image shows an example mashup, in which we opportunistically discovered a covariance between build failures and code churn over time for a specific project:


Hackystat 8 creates new opportunities for mashups, because we can now integrate this kind of data with other data collection and visualization systems. As one example, we are exploring the use of Twitter as a data collection and communication mechanism. Several members of the Hackystat 8 development group "follow" each other with Twitter and post information about their software development activities (among other things) as a way to increase awareness of project state. Here's a recent screen image showing some of our posts:

There are at least two interesting directions for Twitter/Hackystat mashups. Assuming that members of a project team are Twitter-enabled, we can provide a Hackystat service that monitors the data being collected from sensors and sends periodic "tweets" that answer the question "What are you doing now?" for individual developers and/or the project as a whole. Going the other direction, we can gather "tweets" as data that we can display on a Simile/Timeline with our metrics values, which provides an interesting approach to integrating qualitative and quantitative data.
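To make the first direction a little more concrete, here is a minimal Python sketch of how such a service might turn recent sensor data into a status line. It is an illustration under stated assumptions, not the Hackystat implementation: the `SensorEvent` record, its fields, and the `summarize` function are all hypothetical, and the step that actually posts to Twitter is deliberately left out.

```python
from collections import Counter
from dataclasses import dataclass

TWEET_LIMIT = 140  # Twitter's status length limit at the time of writing


@dataclass(frozen=True)
class SensorEvent:
    """Hypothetical, simplified stand-in for one piece of sensor data."""
    developer: str
    activity: str   # e.g. "editing", "testing", "building"
    language: str   # e.g. "Java"
    minutes: int


def summarize(developer: str, events: list[SensorEvent]) -> str:
    """Build a short status line answering "What are you doing now?"."""
    minutes_by_activity = Counter()
    for event in events:
        if event.developer == developer:
            minutes_by_activity[(event.activity, event.language)] += event.minutes
    if not minutes_by_activity:
        return f"{developer}: no recent development activity"
    # Report only the dominant activity to keep the message short.
    (activity, language), minutes = minutes_by_activity.most_common(1)[0]
    status = f"{developer}: mostly {activity} {language} code ({minutes} min in the last interval)"
    return status[:TWEET_LIMIT]  # truncate defensively to fit one tweet
```

A real service would call something like `summarize` on a timer for each developer (or for the project as a whole) and hand the resulting string to whatever Twitter client it uses.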

A second form of mashup is the use of internet-enabled ambient devices such as Ambient Orbs or Nabaztag. The idea here is to get away from the use of the browser (or even the monitor) as the sole interface to Hackystat information and analyses. Instead, we could move toward Mark Weiser's vision of calm technology, or "that which informs but doesn't demand our focus or attention".

The net of all this is that Hackystat is evolving from a kind of "local" capability for mashups, represented by Software Project Telemetry, to a new "global" capability for mashups in which Hackystat can act as a first-class citizen of the internet information infrastructure.

Software development as social network

Google is releasing an API for social networking called OpenSocial. This API essentially enables you to (a) create profiles of users; (b) publish and subscribe to events, and (c) maintain persistent data. You can use Google Gears to maintain data on client computers, and thus create more scalable systems. Google intends this as a way for developers to create third party applications that can run within multiple social networks (MySpace, Orkut), as well as enable users to maintain, transfer, and/or integrate data across these networks.

So. What would Hackystat look like, and what would it do, if it were implemented using OpenSocial?

First, I think that in contrast to the current analysis focus of Hackystat, in which the concept of a "project" as an organizing principle is very important, in an OpenSocial world you might not be so interested in a project-based orientation for analyses. Instead, I think the emphasis would be much more on the individual and their behaviors across, and independent of, projects.

For example, your Hackystat OpenSocial "profile" might include analysis results like: "I worked for three hours hacking Java code yesterday", or "I have a lot of experience with the Java 2D API", or "I use test-driven design practices 80% of the time". All of these might be interesting to others in your social network as a representation of what you are doing currently and/or are capable of doing in the future. The process/product characteristics of the projects that you work on might be less important in an OpenSocial profile for, I think, two reasons: (a) it is harder to understand the individual's contributions in the context of project-level analyses; and (b) project data might "give away" information that the employer of the developer does not want published.

Which brings me to a second conjecture: issues of data privacy or "sanitization" will become much more important for social network software engineering using a system like OpenSocial. To make the example analyses I listed above, it must be possible to collect detailed data about your activities as a developer (sufficient, for example, to infer TDD behaviors), yet publish them at an abstract enough level that no proprietary information is being revealed. That is a fascinating trade-off that will require a great deal of study and research. The implications are both technical and social.
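As a rough illustration of the technical half of that trade-off, the following Python sketch collects detailed per-episode data but publishes only aggregate, project-independent statements of the kind listed earlier. Everything in it is an assumption made for the example: the `Episode` record, its fields, and the `public_profile` function are hypothetical, and neither Hackystat nor OpenSocial defines them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    """Hypothetical record of one development episode inferred from sensor data."""
    project: str      # proprietary: must not be published
    file_path: str    # proprietary: must not be published
    language: str
    minutes: int
    test_first: bool  # True if the episode was classified as test-driven


def public_profile(episodes: list[Episode]) -> dict[str, object]:
    """Aggregate detailed episodes into abstract, project-independent statements."""
    if not episodes:
        return {}
    total_minutes = sum(e.minutes for e in episodes)
    minutes_by_language: dict[str, int] = {}
    for e in episodes:
        minutes_by_language[e.language] = minutes_by_language.get(e.language, 0) + e.minutes
    tdd_share = sum(1 for e in episodes if e.test_first) / len(episodes)
    # Only totals and ratios leave this function; project names and paths never do.
    return {
        "hours_coding": round(total_minutes / 60, 1),
        "hours_by_language": {
            lang: round(m / 60, 1) for lang, m in minutes_by_language.items()
        },
        "tdd_percent": round(100 * tdd_share),
    }
```

Dropping the identifying fields is only the simplest possible form of sanitization. Aggregates can still leak information (for example, when a developer works on a single, well-known project), which is part of why the question needs real study.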